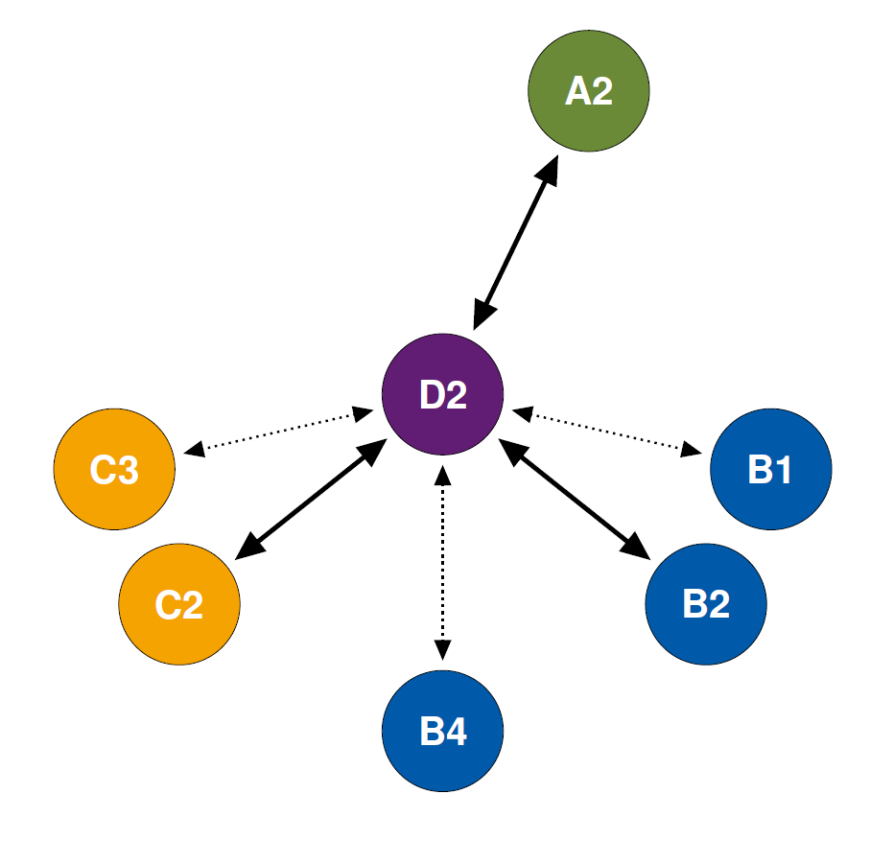

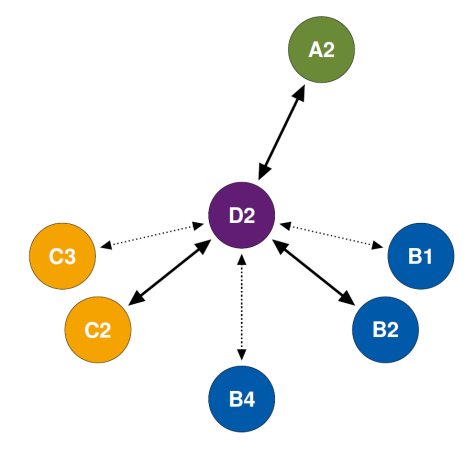

The focus of D2 is therefore on programmable switches and programmable network cards (hereafter referred to as INP platforms), which enable application functions to be executed directly in the network hardware. However, currently available INP platforms still have significant limitations. (1) Application logic vs. performance: Current INP platforms follow different approaches. On the one hand, platforms such as the Barefoot Tofino can execute INP functions at high line rates of up to 100 Gbps Ethernet, but offer only limited programmability through P4. On the other hand, some INP platforms offer general-purpose programmability using languages such as Micro-C, but often achieve line rates substantially below 100 Gbps. (2) Distributed execution and multi-tenancy: Cloud data centers are an important application area for INP platforms. In today's cloud data centers, network switches (and network cards) typically form a complex network topology, and the network hardware is shared by multiple tenants. However, today's INP platforms support these two aspects (distributed execution and multi-tenancy) only to a very limited extent, if at all. (3) Quality-of-service guarantees: Current INP platforms offer applications only limited ways to specify quality-of-service guarantees for the execution of INP functions. This makes it difficult to use INP for applications with mission-critical requirements.

Therefore, D2 will explore a new hardware architecture for INP platforms that uses FPGA-based reconfigurable hardware accelerators. This architecture will not only allow more complex application functions, such as iterative computations, to be realized efficiently, but will also address the limitations described above. For example, the FPGA-based INP platform should be able to execute even complex INP functions at line rates of up to 100 Gbps. D2 will evaluate the new FPGA-based INP platform in several scenarios. The focus will be on using the platform to accelerate typical cloud data center applications (e.g., distributed AI and big data systems such as Spark or TensorFlow), for which efficient use of INP promises significant benefits. However, the hardware technology developed in D2 is also expected to enable deployments beyond cloud data centers (e.g., in edge scenarios in C2). It will therefore be made available throughout MAKI as part of the extended INP testbed.

The hardware-accelerated INP platform developed with the initial start-up funding can be used either as an INP switch or as an INP-capable network card. However, the prototype does not yet support hardware transitions that dynamically adapt the INP functionality to different applications. This capability is useful not only for supporting new applications during ongoing network operation, but also for dynamically distributing INP functions across multiple switches and for adapting hardware resources to the required quality-of-service guarantees. Therefore, in Phase III we will develop a transition-capable, hardware-accelerated INP platform based on the initial results from Phase II. A major focus of D2 is making hardware-accelerated INP functionality accessible to application experts who lack deep expertise in FPGA design. To this end, D2 will investigate a programming language that enables application experts to specify even complex INP functions. D2 also aims to provide suitable programming tools, such as compilers, that automatically construct the hardware-level transition logic from these specifications.

Subproject D2 will therefore make significant contributions to MAKI in the automatic construction of transition logic that adapts the network down to the hardware level. Two further questions also play an important role: how hardware transitions can better support mission-critical requirements, and how cooperative hardware transitions can adapt INP functions across multiple INP platforms. The approaches developed in D2 enable diverse collaborations in Phase III with subprojects from all project areas. To make the technology developed in D2 usable by other subprojects, the MAKI-wide testbed will be extended with the corresponding INP functionality.