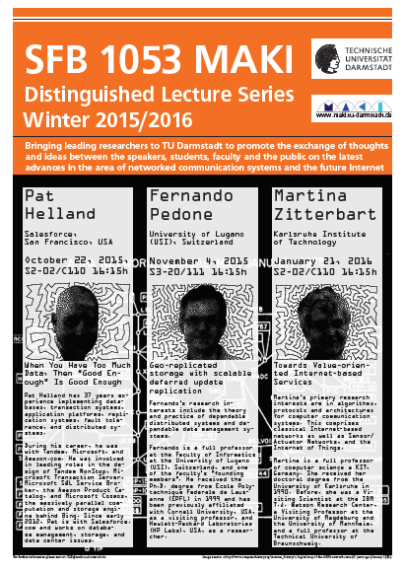

Pat Helland

Salesforce.com Inc., San Francisco, USA

22 October 2015, 04:15 – 05:15 pm

“When You Have Too Much Data, Then ‘Good Enough’ Is Good Enough”

Venue: S2|02 room C110 (Robert-Piloty building, Hochschulstr. 10, 64289 Darmstadt)

Abstract:

Classic database systems offer tight consistency of data with well-defined metadata or DDL. Recently, it has become clear that this is only a subset of the ways data is represented. We see transactions relaxed, and schema either loosened or sometimes not present at all. Data from one system may be shoehorned into another system with incomplete fidelity and data loss.

Sometimes, data arrives over time and the query exists before the data arrives. Sometimes, data is kept in many replicas and updates to one replica are accepted and then squirted around the network to bump into other replicas. We see systems in which patterns of observations about data are processed and offer ever increasing understanding of the relationships between objects.

Finally, we observe that by keeping lots of data from lots of places, you simultaneously achieve ever-increasing knowledge with ever-decreasing precision. This is the inevitable consequence of having too much data.

Short Bio:

Pat Helland has 37 years of experience implementing databases, transaction systems, application platforms, replication systems, fault tolerance, and distributed systems.

During the 1980s, Pat was Chief Architect of Tandem NonStop's TMF (Transaction Monitoring Facility), the transaction processing and recovery engine behind NonStop SQL.

He started working at Microsoft in 1994 and drove the design and architecture for MTS (Microsoft Transaction Server), the N-tier transactional computing environment for Windows, as well as DTC, the Distributed Transaction Coordinator. A few years later, Pat led the development of SQL Service Broker, a high-speed, exactly-once transactional messaging system.

From 2005 to early 2007, Pat worked at Amazon on the Product Catalog. In 2007, he returned to Microsoft, where he worked on a number of projects, including adding indexing and affinitized placement of data to Cosmos, the massively parallel computation and storage engine behind Bing (Big Data). Cosmos supports exabytes of data running on hundreds of thousands of computers. He was one of the original architects of the real-time, event-driven transactional engine for Cosmos.

Since early 2012, Pat has worked at Salesforce.com on database management, storage, and data center issues.